A Complete Guide to Configuring the GPU Runtime for Containers

1. Install Docker and Docker Compose

# Update the system package index

sudo apt update

# Install the required dependencies

sudo apt install -y \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg \
    lsb-release

# Add Docker's official GPG key

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

# Add the official Docker repository

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Update the package index and install Docker

sudo apt update

sudo apt install -y docker-ce docker-ce-cli containerd.io

# Start the Docker service and enable it at boot

sudo systemctl start docker

sudo systemctl enable docker

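# Add the current user to the docker group (so sudo is not needed for every command)

sudo usermod -aG docker $USER

# Note: log out and back in, or run newgrp docker, for the group change to take effect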

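# Install Docker Compose (current release assets use a lowercase OS name, e.g. docker-compose-linux-x86_64)

sudo curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s | tr '[:upper:]' '[:lower:]')-$(uname -m)" -o /usr/local/bin/docker-compose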

sudo chmod +x /usr/local/bin/docker-compose

# Verify the installation

docker --version

docker-compose --version
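The verification above can be folded into a small check that also reports whether the current session has picked up the docker group. This is a minimal sketch; `check_cmd` is a hypothetical helper name, not part of Docker.

```shell
# check_cmd is a hypothetical helper: it reports whether a command is on PATH.
check_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "ok: $1"
    else
        echo "missing: $1"
        return 1
    fi
}

missing=0
for cmd in docker docker-compose; do
    check_cmd "$cmd" || missing=$((missing + 1))
done
echo "commands missing: $missing"

# Group membership only applies to a new login session (or after newgrp docker).
if id -nG | tr ' ' '\n' | grep -qx docker; then
    echo "current session is in the docker group"
else
    echo "current session is not in the docker group yet"
fi
```

If the last line reports that the session is not in the group yet, log out and back in before running docker without sudo.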

2. Configure the NVIDIA Container Toolkit

# Confirm that the NVIDIA driver is installed

nvidia-smi

# Add the NVIDIA Container Toolkit repository

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | \
    sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
    sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
    sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

# Update the package index and install the NVIDIA Container Toolkit

sudo apt update

sudo apt install -y nvidia-container-toolkit

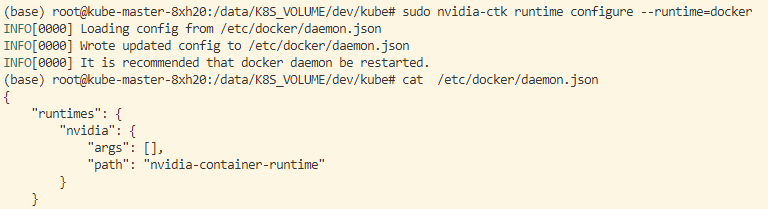

# Configure Docker to support GPUs

sudo nvidia-ctk runtime configure --runtime=docker

# Restart the Docker service so the configuration takes effect

sudo systemctl restart docker

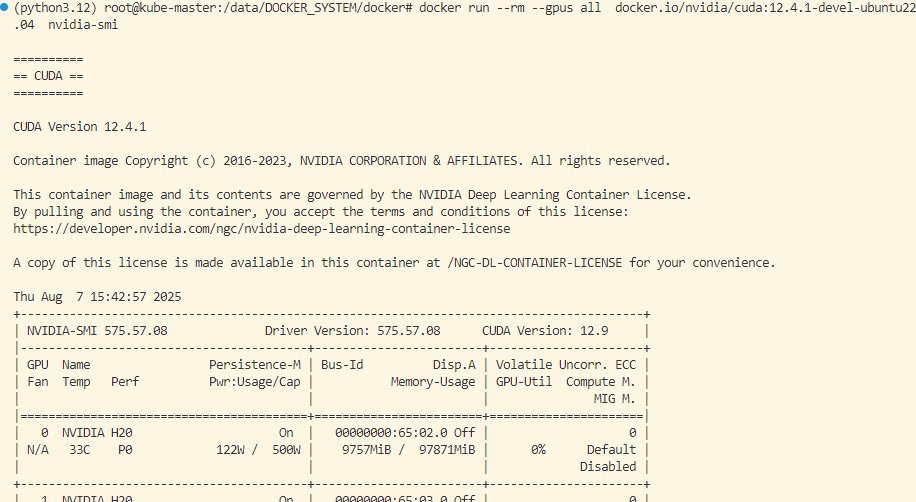
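The nvidia-ctk command above registers an nvidia runtime in /etc/docker/daemon.json. A quick way to confirm that the entry is there is sketched below; `has_nvidia_runtime` is a hypothetical helper name, and the check is a plain text match, not a full JSON parse.

```shell
# has_nvidia_runtime is a hypothetical helper: it succeeds if the given
# daemon.json mentions a runtime named "nvidia".
has_nvidia_runtime() {
    grep -q '"nvidia"' "$1" 2>/dev/null
}

if has_nvidia_runtime /etc/docker/daemon.json; then
    echo "nvidia runtime is registered in /etc/docker/daemon.json"
else
    echo "nvidia runtime not found in /etc/docker/daemon.json"
fi

# If the daemon is reachable, list the runtimes it actually loaded.
if command -v docker >/dev/null 2>&1; then
    docker info --format '{{json .Runtimes}}' 2>/dev/null || true
fi
```

If the entry is missing, rerun the configure step and restart Docker before moving on to the verification in the next section.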

3. Verify GPU support

# Check that the NVIDIA driver is working

nvidia-smi

# Pull the CUDA test image

docker pull nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04

# Test GPU support in Docker

docker run --rm --gpus all nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04 nvidia-smi
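Beyond eyeballing the nvidia-smi table, it can help to compare how many GPUs the host and the container each see. The sketch below assumes the same image as above; `count_gpus` is a hypothetical helper that counts the "GPU N: ..." lines printed by `nvidia-smi -L`.

```shell
# count_gpus is a hypothetical helper: it reads `nvidia-smi -L` output on stdin
# and prints the number of lines that start with "GPU <index>".
count_gpus() {
    grep -c '^GPU [0-9]' || true
}

if command -v nvidia-smi >/dev/null 2>&1 && command -v docker >/dev/null 2>&1; then
    host_count=$(nvidia-smi -L | count_gpus)
    container_count=$(docker run --rm --gpus all \
        nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04 nvidia-smi -L | count_gpus)
    echo "host GPUs: $host_count, container GPUs: $container_count"
else
    echo "nvidia-smi or docker not found; skipping comparison"
fi
```

With `--gpus all` the two counts should match; a lower container count suggests the toolkit configuration did not take effect.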

4. Docker Compose GPU configuration

4.1 Basic GPU configuration

Create a docker-compose.yml file:

version: '3.8'

services:
  gpu-service:
    image: nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04
    command: nvidia-smi
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all # use all GPUs
              capabilities: [gpu]

  # Pin a specific GPU
  specific-gpu-service:
    image: nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04
    command: nvidia-smi
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              device_ids: ['0'] # use GPU 0 only
              capabilities: [gpu]

  # Use several specific GPUs
  multi-gpu-service:
    image: nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04
    command: nvidia-smi
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              device_ids: ['0', '1'] # use GPUs 0 and 1
              capabilities: [gpu]
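To try one of these services, the file can be validated first and then a single service started. The wrapper below is a minimal sketch; `run_gpu_service` is a hypothetical name, and it assumes the standalone docker-compose binary installed in section 1.

```shell
# run_gpu_service is a hypothetical wrapper used only for this sketch.
# Usage: run_gpu_service [compose-file] [service]
run_gpu_service() {
    file=${1:-docker-compose.yml}
    service=${2:-gpu-service}
    if ! command -v docker-compose >/dev/null 2>&1; then
        echo "docker-compose not found"
        return 1
    fi
    if [ ! -f "$file" ]; then
        echo "compose file not found: $file"
        return 1
    fi
    # `config -q` only validates the file; it prints nothing on success.
    docker-compose -f "$file" config -q || return 1
    # Run the service in the foreground; nvidia-smi prints its table and exits.
    docker-compose -f "$file" up "$service"
}
```

For example, `run_gpu_service docker-compose.yml specific-gpu-service` should print an nvidia-smi table that lists only GPU 0.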

4.2 Legacy configuration (broader compatibility)

version: '3.8'

services:
  gpu-service:
    image: nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04
    command: nvidia-smi
    runtime: nvidia
    environment:
      - NVIDIA_VISIBLE_DEVICES=all
      - NVIDIA_DRIVER_CAPABILITIES=compute,utility

  # Pin a specific GPU
  specific-gpu-service:
    image: nvidia/cuda:12.4.1-cudnn-devel-ubuntu22.04
    command: nvidia-smi
    runtime: nvidia
    environment:
      - NVIDIA_VISIBLE_DEVICES=0 # use GPU 0 only
      - NVIDIA_DRIVER_CAPABILITIES=compute,utility

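5. GPU resource isolation and limits

5.1 GPU memory contention

⚠️ Important: when multiple containers share the same GPU, they compete for GPU memory, which can cause errors such as the following: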

CUDA error: operation not permitted

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1

Compile with 'TORCH_USE_CUDA_DSA' to enable device-side assertions

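This problem was found when two ComfyUI containers competed for GPU memory on the same card; other workloads should behave in a similar way. To partition the memory and utilization of a single card, the next part will introduce Kubernetes and the HAMi plugin for resource scheduling.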
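Before reaching for a scheduler, it can be useful to see how much GPU memory each process currently holds. The sketch below only observes usage and does not isolate anything; `sum_gpu_mem` is a hypothetical helper, and it assumes nvidia-smi reports memory in MiB when called with `nounits`.

```shell
# sum_gpu_mem is a hypothetical helper: it reads "pid, used_memory" CSV rows
# (no header, no units) on stdin and prints the total of the second column.
sum_gpu_mem() {
    awk -F', *' '{ total += $2 } END { print total + 0 }'
}

if command -v nvidia-smi >/dev/null 2>&1; then
    # One row per compute process that currently holds GPU memory.
    nvidia-smi --query-compute-apps=pid,used_memory --format=csv,noheader,nounits
    used=$(nvidia-smi --query-compute-apps=pid,used_memory \
        --format=csv,noheader,nounits | sum_gpu_mem)
    echo "total used by compute processes: ${used} MiB"
else
    echo "nvidia-smi not found; skipping"
fi
```

Running this on the host while both containers are active shows which processes are filling the card when the CUDA error appears.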

For more details, see:

Personal blog: https://www.dataeast.cn/

CSDN blog: https://blog.csdn.net/siberiaWarpDrive

Bilibili channel: https://space.bilibili.com/25871614?spm_id_from=333.1007.0.0

Follow the "曲速引擎 Warp Drive" WeChat official account